Instagram has announced a new feature that lets users filter words in comments, a move aimed at making the photo-sharing platform safer for all users. The feature has already started rolling out.

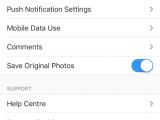

Instagram confirmed that it has added a keyword moderation tool that anyone can use. Users who tap the gear icon on their profiles will find a new Comments tool, where they can list words they consider offensive or inappropriate.

Once the list is set, the feature automatically hides comments that contain any of those words. Users can build their own list or use the default words that Instagram provides.

Instagram already offers other tools for managing comments: users can swipe to delete a comment, report inappropriate comments, or block accounts.

“The beauty of the Instagram community is the diversity of its members. All different types of people - from diverse backgrounds, races, genders, sexual orientations, abilities and more - call Instagram home, but sometimes the comments on their posts can be unkind,” said Kevin Systrom, Instagram co-founder and CEO, on the company’s blog.

Additionally, Instagram is rolling out a feature that shows more relevant and personalized comments in the comments preview, instead of the most recent ones.

Instagram also recently announced a feature that lets the app suggest stories based on user preferences. The recommendations will draw on the accounts users follow and the topics they are interested in.
